ML Fundamentals

We investigate efficient ways to optimize the learning process of ML-based systems through novel methods in multi-task learning.

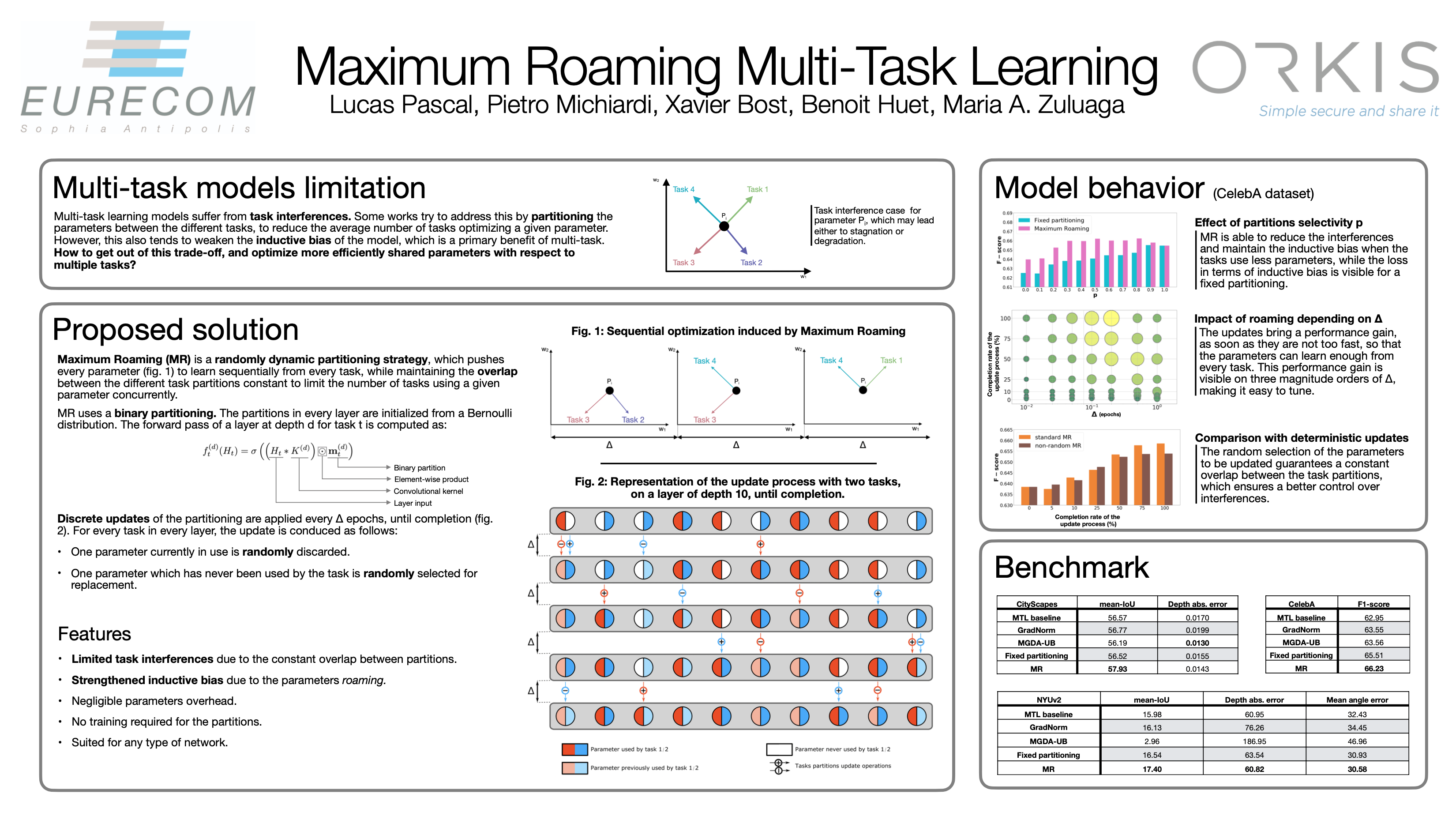

Project: Maximum Roaming Multi-Task Learning

We have proposed Maximum Roaming (MR) multi-task learning, a dynamic, randomized partitioning strategy that pushes every parameter to learn sequentially from every task, while keeping the overlap between different task partitions constant to limit the number of tasks concurrently using a given parameter. This work was presented at AAAI 2021.
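The partitioning idea can be illustrated with a minimal NumPy sketch. It is not the released implementation: the function names `init_partitions` and `roam_step`, the `share_ratio` parameter, and the one-swap-per-task update rule are assumptions made for illustration. Each task holds a binary mask over a layer's units, and at every roaming step it drops one active unit and picks up one it has never used, so the partition size (and hence the expected overlap between tasks) stays fixed while every unit is eventually visited by every task.

```python
import numpy as np

def init_partitions(num_tasks, num_units, share_ratio, rng):
    """Give each task a random binary mask with the same number of active units."""
    k = max(1, round(share_ratio * num_units))
    masks = np.zeros((num_tasks, num_units), dtype=bool)
    for t in range(num_tasks):
        masks[t, rng.choice(num_units, size=k, replace=False)] = True
    return masks

def roam_step(masks, visited, rng):
    """Swap one unit per task: drop an active unit, add a never-visited one."""
    for t in range(masks.shape[0]):
        unvisited = np.flatnonzero(~visited[t])
        if unvisited.size == 0:
            continue  # task t has already used every unit
        active = np.flatnonzero(masks[t])
        masks[t, rng.choice(active)] = False   # release one active unit
        new_unit = rng.choice(unvisited)       # pick a unit not yet seen by task t
        masks[t, new_unit] = True
        visited[t, new_unit] = True
    return masks, visited

# Hypothetical toy setting: 3 tasks sharing a layer of 8 units.
rng = np.random.default_rng(0)
masks = init_partitions(num_tasks=3, num_units=8, share_ratio=0.5, rng=rng)
visited = masks.copy()  # units in the initial partition count as visited
steps = 0
while not visited.all():
    masks, visited = roam_step(masks, visited, rng)
    steps += 1
```

In a real network, each mask would gate which units (for example, convolutional channels) contribute to a task's forward and backward pass, and roaming steps would be interleaved with training updates rather than run in a tight loop.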

All code is available on GitHub.

Students

Lucas Pascal (funded by a CIFRE PhD)

Publications

L. Pascal, P. Michiardi, X. Bost, B. Huet, M. A. Zuluaga. Maximum Roaming Multi-Task Learning. In: Proceedings of the 35th AAAI Conference on Artificial Intelligence, February 2-9, 2021.